InterviewToast

Yannis Panagis / February 8, 2022

InterviewToast is an interview preparation, feedback and assessment tool that’s designed to help you make sure that at your next interview, you won't be toast!

How does it work? 🤔

I built this project with a friend in one week for the DeveloperWeek 2022 Hackathon. It serves three functions:

- Interview Preparation Tool: There are plenty of great tools to help you prepare for the coding part of the application process. However, many job interviews aren't just about code, and few tools are designed specifically to help with that part of the process. By leveraging Symbl's conversation intelligence AI in our video application, InterviewToast goes beyond simple speech-to-text: it provides real-time insights and extracts topics of discussion, sentiment, and conversation analytics such as talk-to-listen ratios.

- Interview Feedback Tool: Working from the questions candidates choose to practice, InterviewToast computes performance ratings and provides quantitative and qualitative feedback on their practice interviews.

- Interview Assessment Tool: InterviewToast uses Agora to provide real-time transcriptions, keyword and profanity detection, and analysis of live interviews. Companies are under increasing pressure, both from regulators and from lawsuits by candidates they did not hire, to provide objective feedback. InterviewToast offers a third perspective that uses real-time data analytics and impartial feedback to improve the experience for candidates and interviewers alike. On top of this, the platform aggregates data on the questions interviewers ask so that these can eventually be fed back into the question database. This way, candidates practice with the most relevant and up-to-date questions, the ones most likely to come up.
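Symbl.ai reports talk-to-listen ratios for us, but the metric itself is simple enough to sketch. The example below is a hypothetical illustration, not Symbl's API: it assumes a transcript already split into speaker-labelled segments with start and end times (the `Segment` type and both functions are made up for this example) and treats everyone else's talk time as the candidate's listening time.

```typescript
// Hypothetical transcript segment: who spoke, and from when to when (seconds).
// Illustrative only; this is not Symbl.ai's response format.
interface Segment {
  speaker: string;
  start: number;
  end: number;
}

// Total speaking time per speaker, in seconds.
// Malformed segments with end < start count as zero seconds.
function talkTimeBySpeaker(segments: Segment[]): Map<string, number> {
  const totals = new Map<string, number>();
  for (const { speaker, start, end } of segments) {
    const duration = Math.max(0, end - start);
    totals.set(speaker, (totals.get(speaker) ?? 0) + duration);
  }
  return totals;
}

// Candidate talk time divided by everyone else's talk time, which we treat
// as the candidate's "listen" time. Silence counts towards neither side.
// Returns Infinity if only the candidate spoke, NaN if nobody spoke.
function talkToListenRatio(segments: Segment[], candidate: string): number {
  const totals = talkTimeBySpeaker(segments);
  const talk = totals.get(candidate) ?? 0;
  let listen = 0;
  totals.forEach((seconds, speaker) => {
    if (speaker !== candidate) listen += seconds;
  });
  if (listen === 0) return talk === 0 ? NaN : Infinity;
  return talk / listen;
}

const demo: Segment[] = [
  { speaker: "interviewer", start: 0, end: 20 },
  { speaker: "candidate", start: 20, end: 80 },
  { speaker: "interviewer", start: 80, end: 90 },
  { speaker: "candidate", start: 90, end: 120 },
];
console.log(talkToListenRatio(demo, "candidate")); // 3
```

Here the candidate spoke for 90 seconds against the interviewer's 30, giving a ratio of 3. Because silence is ignored, the number only describes how talk time was shared, not how much of the call was dead air.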

One reason this project was exciting is that it was my first opportunity to experiment with WebRTC, an open-source project that many applications, particularly video-chat applications, use for real-time communication.

🚀 How we built it

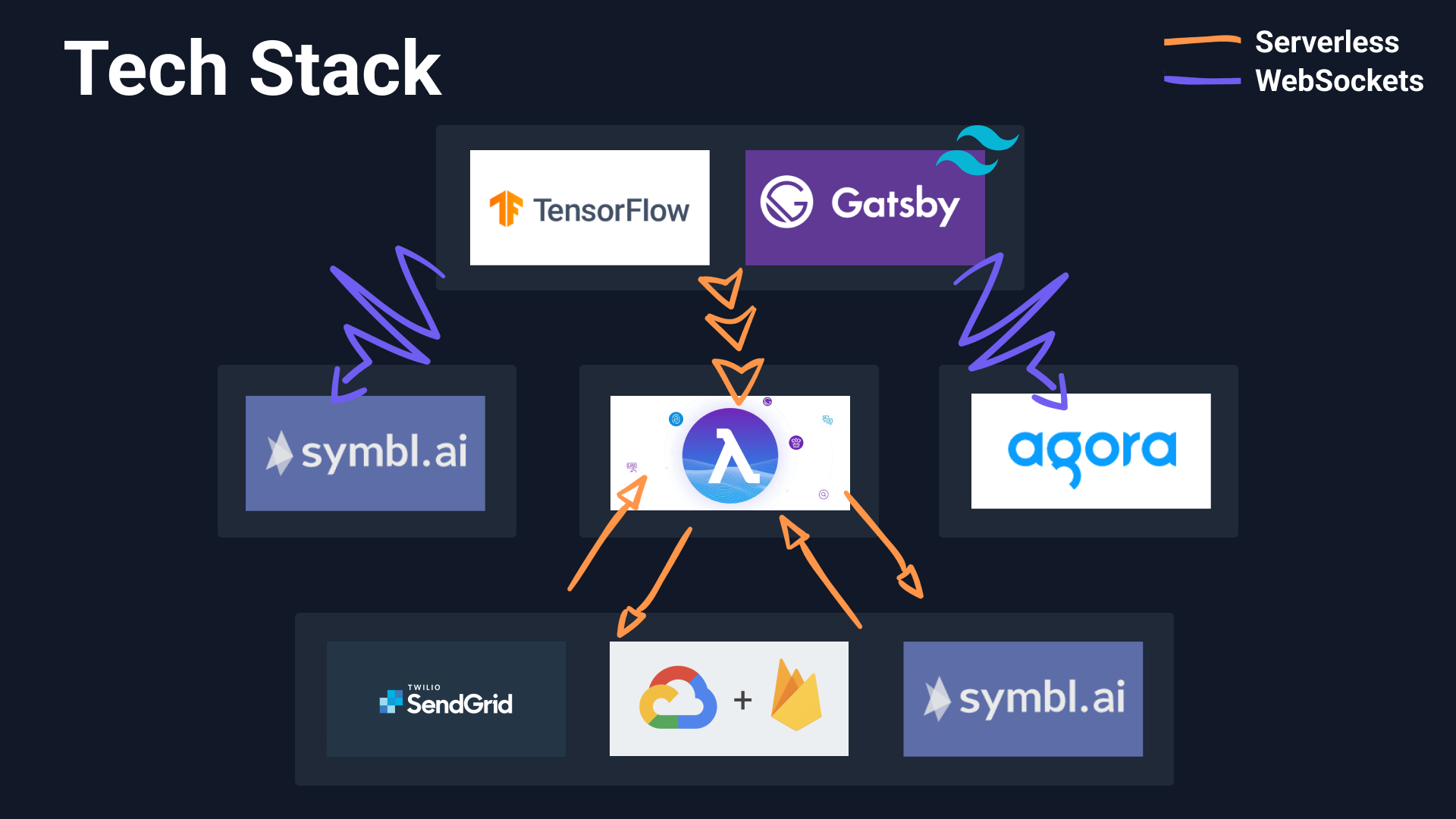

The application was built with:

- Symbl.ai’s APIs for sentiment analysis, topic extraction, conversation analytics, entity and intent recognition, and real-time insights

- Agora for video calling

- ReactJS as the de facto JavaScript library for building user interfaces

- GatsbyJS as our blazing-fast React framework for performance, scalability, security and accessibility

- Gatsby Cloud Functions to give InterviewToast an entire backend without having to manage one

- Firebase for user accounts, SSO, and the database

- TailwindCSS for the speed and benefits of a utility-first CSS framework

- TensorFlowJS Pose-Detection for slouch detection (detailed below)

These pieces came together nicely in a simple architecture, shown in the diagram below. Keeping it simple was ideal for a short, time-limited hackathon done outside of work hours.

Bonus: Pose-Detection with TensorFlowJS

Did you know that TensorFlow has a JavaScript API? Well, it does, and it's very fun to experiment with. Conversational insights go beyond the audio alone: by one often-quoted estimate, only 7% of the interpretation of a conversation is verbal, 38% is vocal and a whopping 55% is visual. To make use of this component of the practice sessions, we used the state-of-the-art models in the TensorFlowJS Pose Detection package for real-time pose detection.

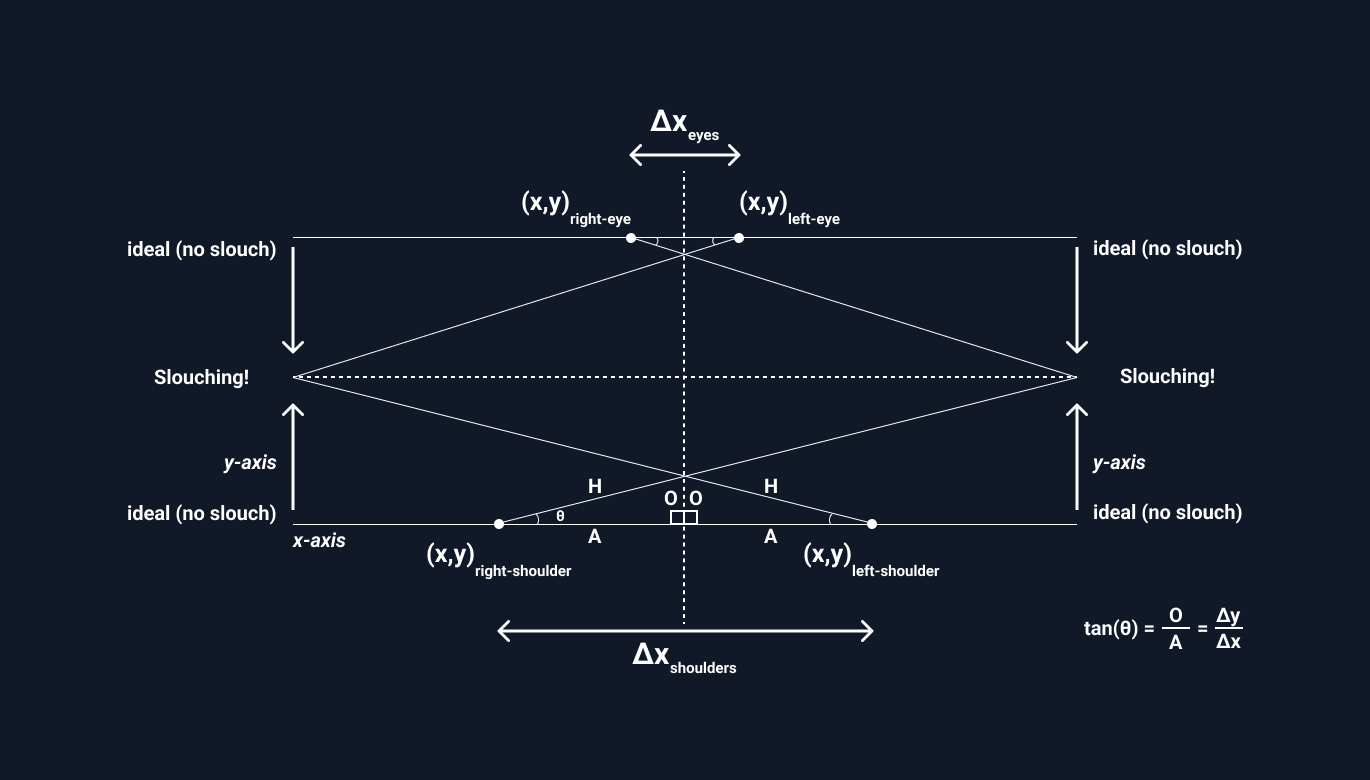

The MoveNet model detected up to 17 keypoints on the body and could run at 50+ fps on modern phones and laptops, without compromising the quality or speed of the video experience. From this, we could estimate the positions of both eyes and both shoulders and use some simple maths to calculate, with reasonable accuracy, whether or not a candidate is slouching (forwards/backwards/left/right). In case that verbal description wasn't visual enough, here's a picture of PoseNet in action, with the keypoints mapped onto a frame of my head and shoulders.
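As a minimal sketch of the left/right part of that maths (our assumption about the approach, not the project's actual code), the two shoulder keypoints can be turned into an angle from the horizontal axis. The `Keypoint` interface mirrors the `{x, y, score, name}` objects that `detector.estimatePoses(video)` returns in the pose-detection package; the `lateralLean` function and both thresholds are hypothetical.

```typescript
// Keypoint shape as returned by @tensorflow-models/pose-detection:
// pixel coordinates with y growing downwards; score and name are optional.
interface Keypoint {
  x: number;
  y: number;
  score?: number;
  name?: string;
}

type Lean = "level" | "left" | "right" | "unknown";

// Hypothetical thresholds, to be tuned on real footage.
const MIN_SCORE = 0.3; // ignore low-confidence detections
const MAX_TILT_DEG = 10; // tilt (in degrees) still counted as level

// Estimates sideways lean from the angle between the shoulder line and the
// horizontal axis, using the keypoints labelled as the person's left and
// right shoulders.
function lateralLean(
  leftShoulder: Keypoint,
  rightShoulder: Keypoint,
): { lean: Lean; angleDeg: number } {
  if ((leftShoulder.score ?? 1) < MIN_SCORE || (rightShoulder.score ?? 1) < MIN_SCORE) {
    return { lean: "unknown", angleDeg: NaN };
  }
  const dx = Math.abs(rightShoulder.x - leftShoulder.x);
  const dy = rightShoulder.y - leftShoulder.y; // > 0: right shoulder is lower
  const angleDeg = (Math.atan2(Math.abs(dy), dx) * 180) / Math.PI;
  if (angleDeg <= MAX_TILT_DEG) return { lean: "level", angleDeg };
  // A sideways lean drops the shoulder on the side being leaned towards.
  return { lean: dy > 0 ? "right" : "left", angleDeg };
}

const tilted = lateralLean(
  { x: 400, y: 300, name: "left_shoulder" },
  { x: 200, y: 360, name: "right_shoulder" },
);
console.log(tilted.lean, tilted.angleDeg.toFixed(1)); // right 16.7
```

The same angle calculation applied to the two eye keypoints would give a head tilt, which could be combined with the shoulder tilt.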

The following diagram explains how we used the angles of displacement from the horizontal axis, and the distances between keypoints as seen by the camera, to quantify slouching.
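For forwards/backwards slouching, one simple distance-based heuristic (our assumption here, which may differ from what the diagram describes) is to compare the apparent shoulder width with a baseline captured while the candidate sits upright: leaning towards the camera makes the shoulders look wider, leaning away makes them look narrower. The helper names, the calibration step and the 15% tolerance are all hypothetical.

```typescript
// Keypoint shape as returned by @tensorflow-models/pose-detection
// (pixel coordinates; score and name are optional).
interface Keypoint {
  x: number;
  y: number;
  score?: number;
  name?: string;
}

type DepthLean = "upright" | "forwards" | "backwards";

// Hypothetical tolerance: a change of more than 15% in apparent shoulder
// width, relative to the upright baseline, counts as leaning.
const TOLERANCE = 0.15;

// Straight-line distance between two keypoints, in pixels.
function distance(a: Keypoint, b: Keypoint): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Compares the current shoulder width with a baseline recorded while the
// candidate sat upright: closer to the camera looks wider, further away
// looks narrower.
function depthLean(
  leftShoulder: Keypoint,
  rightShoulder: Keypoint,
  baselineWidth: number,
): DepthLean {
  const ratio = distance(leftShoulder, rightShoulder) / baselineWidth;
  if (ratio > 1 + TOLERANCE) return "forwards";
  if (ratio < 1 - TOLERANCE) return "backwards";
  return "upright";
}

const baseline = 200; // px, hypothetically measured during a calibration step
console.log(depthLean({ x: 420, y: 300 }, { x: 180, y: 300 }, baseline)); // forwards
console.log(depthLean({ x: 375, y: 300 }, { x: 225, y: 300 }, baseline)); // backwards
```

Shoulder width also shrinks when a candidate turns their torso, so in practice this cue would probably need to be combined with others, such as the eye positions.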

Want to find out more?

If you want to know more about this project, check it out at interviewtoast.com or browse the source code on GitHub (interviewtoast).